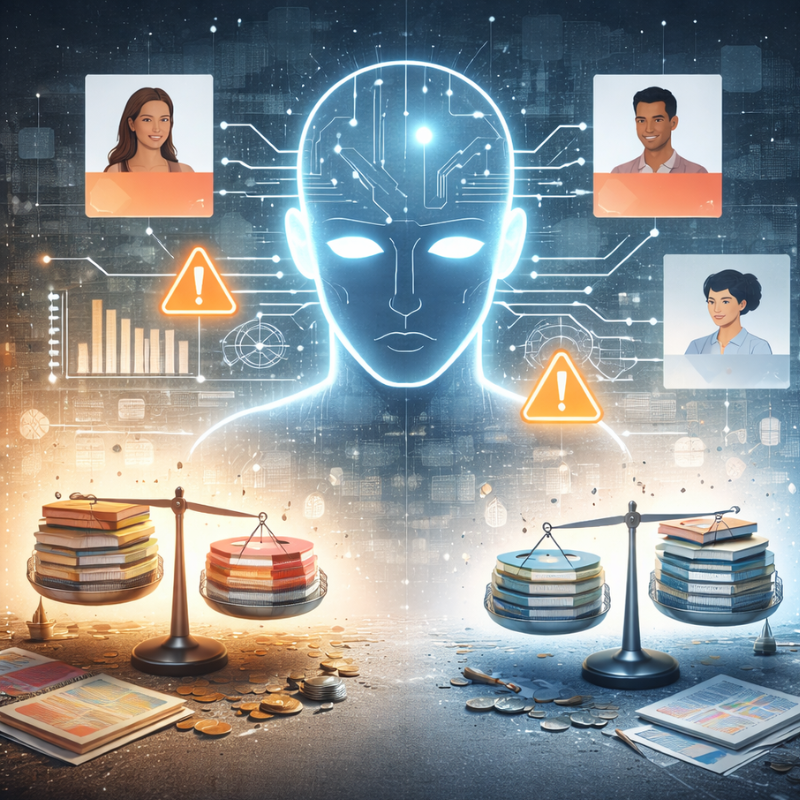

Artificial intelligence is increasingly trusted to assist with decisions that shape our daily lives. From recommending products and filtering job applications to approving loans and detecting fraud, AI-driven systems influence outcomes at scale. While these technologies promise efficiency and accuracy, they also carry a critical challenge: bias in AI.

Understanding how bias enters AI systems and how it affects decision-making is essential for anyone relying on data-driven technologies. Without careful oversight, biased AI can lead to unfair, inaccurate, or even harmful outcomes.

What Is Bias in AI?

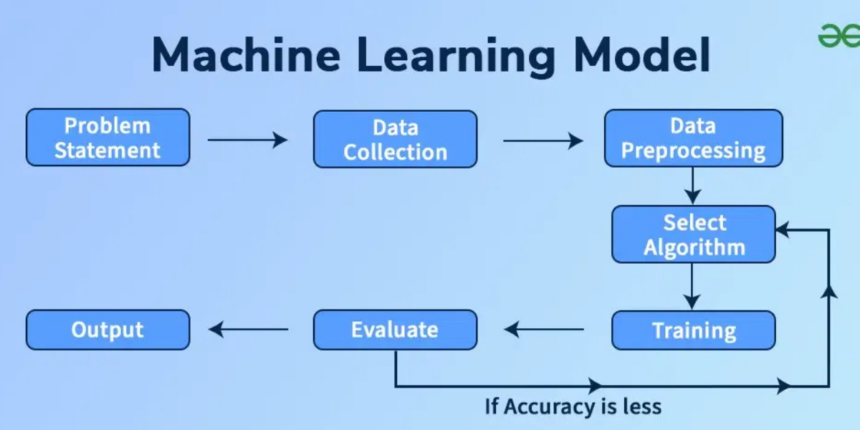

Bias in AI occurs when an artificial intelligence system produces systematically unfair or skewed results. This usually happens because AI models learn patterns from data, and that data often reflects existing human, social, or historical biases.

AI does not develop opinions on its own. Instead, it mirrors the data it is trained on and the assumptions built into its design. When these inputs are flawed or unbalanced, biased decision-making becomes a real risk.

How Bias Enters AI Systems

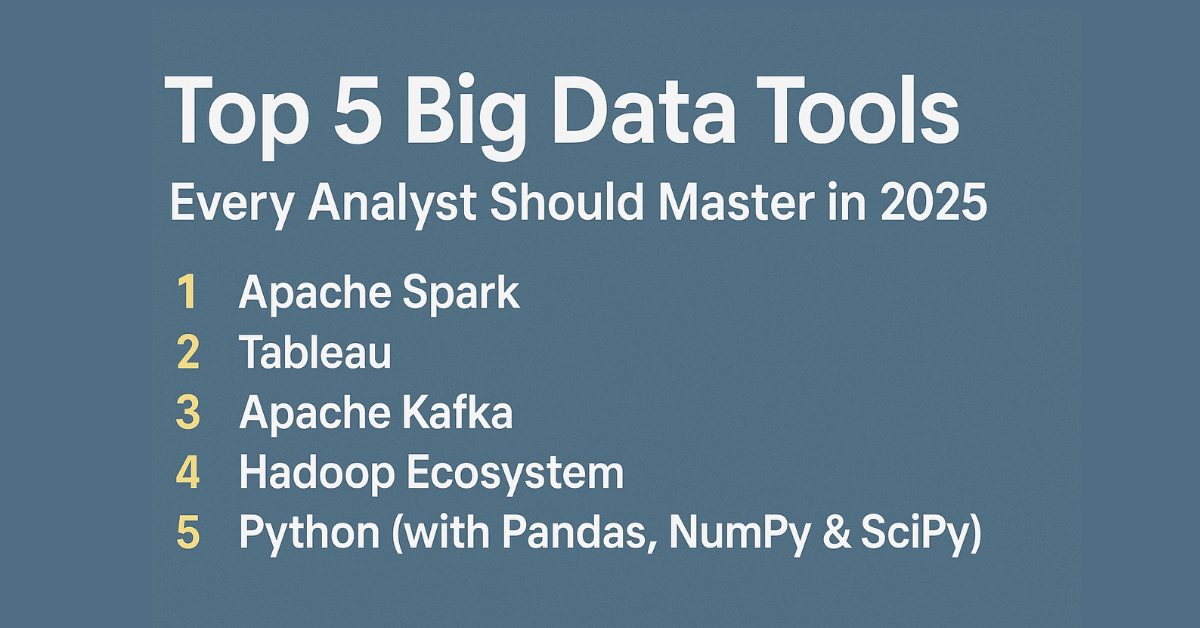

Bias can appear at multiple stages of the AI development lifecycle. One of the most common sources is biased training data. If a dataset lacks diversity or overrepresents certain groups, the AI will likely favor those groups in its predictions or decisions.
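
A quick way to spot an unbalanced dataset is to measure how much of it each group makes up. The sketch below is only an illustration: the `group` field, the toy records, and the `group_shares` helper are hypothetical names, not part of any particular library.

```python
from collections import Counter

def group_shares(records, key):
    """Return each group's share of the dataset as a fraction of all records."""
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

# Hypothetical toy data: 8 records from group "A" and 2 from group "B".
records = [{"group": "A"}] * 8 + [{"group": "B"}] * 2

shares = group_shares(records, "group")
for group, share in shares.items():
    print(f"{group}: {share:.0%}")
```

In this toy example group "B" makes up only a fifth of the data, which is the kind of imbalance worth investigating before training. What counts as "too small" depends on the application, so treat the numbers as a prompt for review rather than a pass/fail rule.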

Another source of bias lies in how problems are defined. The choice of variables, labels, and evaluation metrics can unintentionally prioritize certain outcomes over others. Even well-intentioned developers can introduce bias if ethical considerations are not addressed early in the design process.

The Impact of Biased AI on Decision-Making

When AI systems influence decisions, bias can have real-world consequences. In hiring processes, biased algorithms may unfairly disadvantage qualified candidates. In finance, biased credit models can limit access to loans for specific demographics. In healthcare, biased data may lead to unequal treatment recommendations.

These outcomes not only affect individuals but also erode trust in technology. Decisions that appear objective can feel deeply unfair when people realize they are influenced by hidden biases. This is why understanding bias in AI is critical for responsible decision-making.

Why Bias Is Hard to Detect

One of the biggest challenges with AI bias is that it is often invisible. Complex algorithms operate behind the scenes, making it difficult for users to understand how decisions are reached. Unlike human bias, which can sometimes be identified through behavior, algorithmic bias is embedded in code and data.

Additionally, biased results may not be immediately obvious. An AI system can perform well overall while consistently disadvantaging a smaller group. Without careful testing and monitoring, these patterns can go unnoticed for long periods.
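
To see how a strong overall score can hide a weak result for a smaller group, compare accuracy across the whole dataset with accuracy per group. This is a minimal sketch using made-up predictions; the group names and the `accuracy_by_group` helper are assumptions for illustration.

```python
from collections import defaultdict

def accuracy_by_group(rows):
    """rows: iterable of (group, y_true, y_pred).

    Returns (overall_accuracy, {group: accuracy}).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in rows:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group

# Hypothetical predictions: the model is always right for the large group
# and right only half the time for the small one.
rows = (
    [("majority", 1, 1)] * 90    # 90 correct predictions
    + [("minority", 1, 1)] * 5   # 5 correct predictions
    + [("minority", 1, 0)] * 5   # 5 wrong predictions
)

overall, per_group = accuracy_by_group(rows)
print(f"overall: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

Here the overall accuracy is 95%, yet the smaller group sees only 50%. Reporting metrics per group, not just in aggregate, is what makes this kind of gap visible.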

The Role of Data Quality and Diversity

High-quality, diverse data is one of the most effective ways to reduce bias in AI systems. Diverse datasets help AI models learn a wider range of patterns and avoid overfitting to the groups and situations that happen to dominate the training data.

However, data diversity alone is not enough. Continuous evaluation, regular updates, and ethical review processes are necessary to ensure AI systems remain fair as real-world conditions change. Responsible decision-making requires ongoing attention, not a one-time fix.

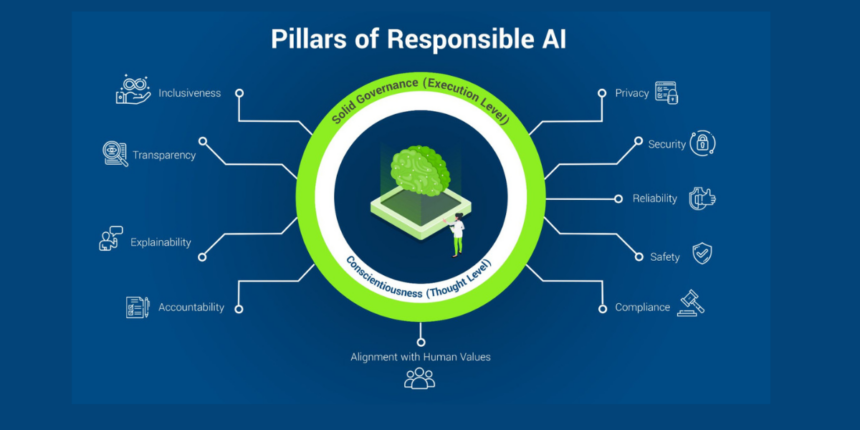

Transparency and Accountability in AI Decisions

Transparency plays a key role in addressing bias. When organizations understand how AI systems reach conclusions, they are better equipped to identify unfair outcomes and correct them.

Accountability is equally important. AI should support human decision-making, not replace responsibility. Clear governance structures ensure that when biased decisions occur, there is a process for review, explanation, and improvement.

Why Reducing AI Bias Matters for Businesses and Society

For businesses, biased AI can lead to reputational damage, legal risks, and loss of customer trust. Consumers are increasingly aware of how data-driven decisions affect them, and they expect fairness and transparency from technology-driven organizations.

From a societal perspective, unchecked AI bias can reinforce inequality and widen existing gaps. Ethical AI practices help ensure that innovation contributes positively to society rather than amplifying systemic problems.

Moving Toward Fairer AI Decision-Making

Reducing bias in AI requires a combination of technical solutions and ethical awareness. This includes diverse development teams, inclusive data practices, bias testing, and regular audits of AI systems.
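
As one illustration of what a simple bias test might look like, the sketch below compares approval rates between groups and reports the ratio of the lowest rate to the highest. The data and function names are hypothetical, and the 0.8 threshold reflects the commonly cited "four-fifths" rule of thumb, which is an assumption here rather than a universal standard.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved). Returns approval rate per group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, was_approved in decisions:
        total[group] += 1
        approved[group] += int(was_approved)
    return {g: approved[g] / total[g] for g in total}

def selection_rate_ratio(rates):
    """Lowest approval rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so rates are equal
    return min(rates.values()) / highest

# Hypothetical decisions: group "A" approved 50 of 100, group "B" 30 of 100.
decisions = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(decisions)
ratio = selection_rate_ratio(rates)
THRESHOLD = 0.8  # assumed rule-of-thumb cutoff; choose one that fits your context
print(rates)
print(f"ratio: {ratio:.2f}, flag for review: {ratio < THRESHOLD}")
```

A low ratio does not prove the system is unfair, and a high one does not prove it is fair. It is one signal that an audit can use to decide where to look more closely.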

Education also plays a vital role. The more organizations and users understand how AI works, the better equipped they are to question outcomes and demand responsible practices. Fair AI is not just a technical goal. It is a shared responsibility.

Final Thoughts

Bias in AI is a complex challenge, but one that can be meaningfully reduced. By understanding how bias affects decision-making, organizations and individuals can take concrete steps toward building fairer, more trustworthy systems. Ethical awareness, transparency, and continuous evaluation are essential to ensuring AI supports better outcomes for everyone.
